Anthropic has equipped its Claude AI chatbot with a new memory function, enabling it to retrieve and reference past conversations when explicitly prompted by users. Announced August 12, this feature marks Anthropic’s entry into the competitive arena of AI contextual recall, a capability rivals like OpenAI’s ChatGPT and Google’s Gemini have offered for months.

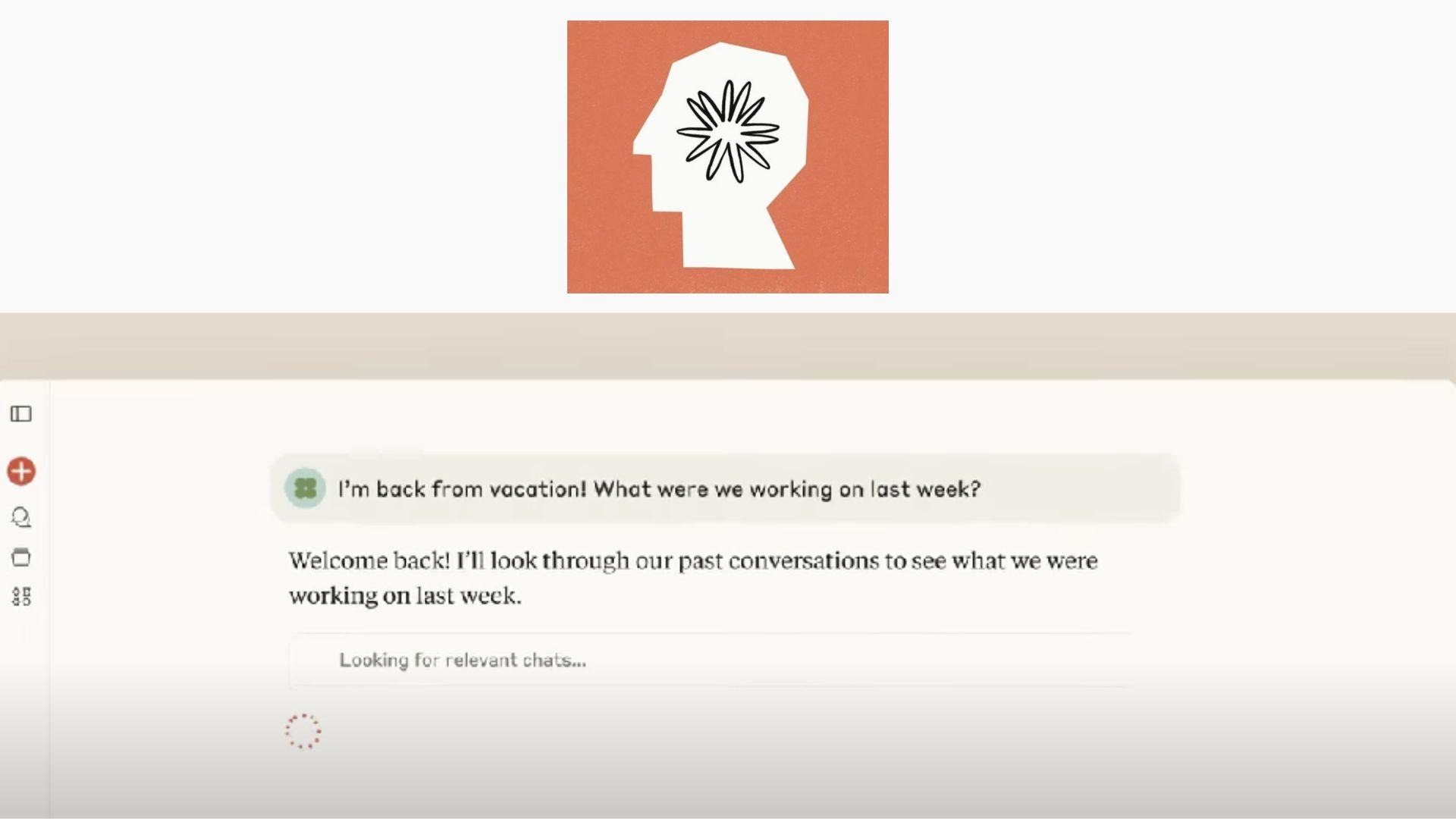

The functionality lets users ask Claude to search previous chats for relevant details, summarize past discussions, or pick up projects where they left off. In a demo video, Claude recalled a user's pre-vacation work and offered to continue the project, with Anthropic promising users will "never lose track of your work again." Unlike ChatGPT's persistent background memory, Claude's recall is strictly opt-in and query-specific. It does not automatically build long-term user profiles, a distinction Anthropic presents as privacy-forward.

Rollout Strategy and Access Limitations

Currently, the feature is available exclusively for Claude’s Max, Team, and Enterprise subscribers. Anthropic confirmed plans to extend access to Pro-tier users “soon,” but free-tier availability remains uncertain. Users can activate the tool via Settings > Profile > “Search and reference chats”.

The rollout strategy highlights Anthropic’s focus on monetizing high-value enterprise users first. Industry analysts note this contrasts with competitors: ChatGPT and Gemini offer similar features to free users. “Prioritizing paid tiers makes business sense but risks alienating casual users,” remarked Priya Kumar, an AI product strategist at TechInsights.

Technical Mechanics and Privacy Controls

Claude's memory operates through targeted retrieval rather than continuous learning. When asked, it scans prior conversations within a specific workspace or project scope, then summarizes or extracts only the relevant context instead of loading entire past conversations into the active session. Users can disable the feature entirely or silo conversations by project (e.g., separating work and personal chats).

This contrasts sharply with ChatGPT’s approach, which automatically applies remembered details to new chats. Ryan Donegan, Anthropic’s spokesperson, clarified that Claude avoids “background persistence,” retrieving history only on demand.

User Concerns and Competitive Pressure

Despite the enthusiasm, some subscribers have raised concerns about token consumption. Retrieving extensive chat histories could push users into Claude's rate limits sooner. Anthropic recently tightened those limits after some users exploited earlier policies, running up usage worth "tens of thousands of dollars." The company has not yet clarified whether memory searches count against token quotas.

The update arrives amid intensifying AI competition. Last week, OpenAI launched GPT-5, while Anthropic is seeking funding at a valuation of up to $170 billion. Memory features boost "stickiness," keeping users engaged within one AI ecosystem. However, they also fuel debates around privacy and emotional dependency, with some users reporting over-reliance on ChatGPT for mental health support.

Anthropic’s cautious, privacy-centric memory model caters to enterprises wary of data leaks. Yet its delayed entry gives rivals a head start in refining contextual AI. For now, Claude’s approach offers a middle ground: continuity without automation. As Kumar notes, “The real test is whether users see enough value here to choose Claude over more established alternatives.”